BeingThere Centre UNC

International Research Centre for Tele-Presence and Tele-Collaboration

Videos

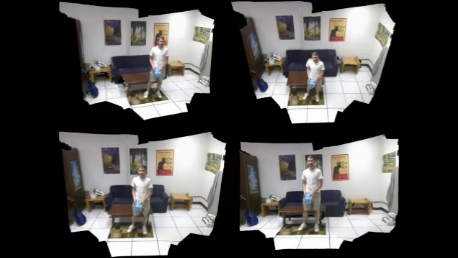

Real-Time Volumetric 3D Capture of Room-Sized Scenes for Telepresence

Video accompanying the paper “Real-Time Volumetric 3D Capture of Room-Sized Scenes for Telepresence” by A. Maimone and H. Fuchs, to appear at 3DTV-Con 2012, Zurich, Switzerland, 15-17 Oct 2012. Video prepared by Andrew Maimone.

Enhanced 3D Capture of Room-sized Dynamic Scene with Commodity Depth Cameras

In this project, we designed a system that captures an enhanced 3D model of a room-sized dynamic scene using commodity depth cameras such as Microsoft Kinects. The system incorporates temporal information to achieve a noise-free and complete 3D capture of the entire room. Specifically, we pre-scan the static parts of the room offline and track their movements online. For dynamic objects, we perform non-rigid alignment between frames and accumulate data over time. The system also handles changes in object topology and interactions between objects. Video prepared by Mingsong Dou.

Augmented Reality Telepresence with 3D Scanning and Optical See-Through HMDs